Completely not there versus almost not there.

Picture taken by Stephan Delange

In my last post, where I tried to quantify the concept of "discernibility," I left off saying I was going to try out my "50/50" definition on the PINQ implementation of differential privacy.

It turned out to be a rather painful process, both because I can be literal-minded in an unhelpful way at times and because this stuff is plain hard to figure out.

To backtrack a bit, let's first make some rather obvious statements to get a running start before wading into some truly non-obvious ones.

Crossing the discernibility line.

In the extreme case, we know that if there were no privacy protection whatsoever and the datatrust just gave out straight answers, then we would definitely cross the "discernibility line" and violate our privacy guarantee. So let's go back to my pirate friend and ask, "How many people with skeletons in their closet wear an eye-patch and live in my building?" If you (my rather distinctive eye-patch-wearing neighbor) are in the data set, the answer will be 1. If you are not in the data set, the answer will be 0.

With no privacy protection, the presence or absence of your record in the data set makes a huge difference to the answers I get, and is therefore extremely discernible.
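To make the "straight answer" case concrete, here is a minimal Python sketch of an unprotected count over a toy data set. The records, field names, and the `exact_count` helper are all hypothetical. The only point is that the true count flips from 1 to 0 depending on whether a single record is present.

```python
# Toy data set of people with skeletons in their closet.
# Field names and values are hypothetical, for illustration only.

def exact_count(records, predicate):
    """Return the true number of matching records (no privacy protection)."""
    return sum(1 for r in records if predicate(r))

neighbor = {"name": "neighbor", "eye_patch": True, "building": "B"}
others = [
    {"name": "a", "eye_patch": False, "building": "B"},
    {"name": "b", "eye_patch": True, "building": "C"},
]

def wears_patch_in_my_building(r):
    return r["eye_patch"] and r["building"] == "B"

with_neighbor = exact_count(others + [neighbor], wears_patch_in_my_building)
without_neighbor = exact_count(others, wears_patch_in_my_building)
print(with_neighbor, without_neighbor)  # 1 0
```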

Thankfully, PINQ doesn't give straight answers. It adds "noise" to answers to obfuscate them.

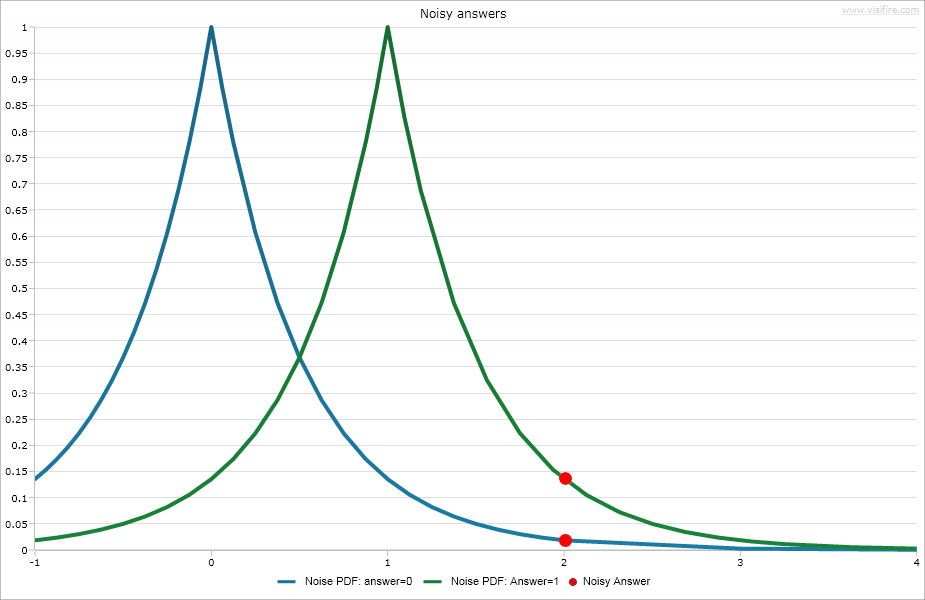

Now when I ask, "How many people in this data set of people with skeletons in their closet wear an eye-patch and live in my building?" PINQ counts the number of people who meet these criteria and then either "removes" some of those people or "adds" some "fake" people to give me a "noisy" answer to my question.

How it chooses to do so is governed by a distribution curve developed by and named for the French mathematician Pierre-Simon Laplace, the marquis de Laplace. (I don't know why it has to be this particular curve, but I am curious to learn why.) You can see the curve illustrated below in two distinct postures, showing very little privacy protection and quite a lot of privacy protection, respectively.
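The add-or-remove behavior described above can be sketched as the textbook Laplace mechanism for a count. This is a minimal Python illustration under stated assumptions, not PINQ's actual code or API: `laplace_noise` and `noisy_count` are hypothetical helpers, and `epsilon` stands in for the privacy parameter that controls how much noise is added. Because adding or removing one person changes a count by at most 1, the noise scale here is `1 / epsilon`. In this sketch the noisy answer is a real number; "adding or removing fake people" is a way of picturing it.

```python
import random

def laplace_noise(scale, rng=random):
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential draws with rate 1/scale
    is Laplace-distributed with location 0 and the given scale.
    """
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)

def noisy_count(records, predicate, epsilon, rng=random):
    """Hypothetical Laplace-mechanism count (an assumption, not PINQ's API).

    Adding or removing one person changes a count by at most 1, so the
    noise scale is 1 / epsilon: a smaller epsilon gives a wider, noisier curve.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)
records = [{"eye_patch": True}, {"eye_patch": False}]
print(noisy_count(records, lambda r: r["eye_patch"], epsilon=0.5, rng=rng))
```

Passing a seeded `random.Random` makes runs repeatable; each call still returns a different noisy answer centered on the real count of 1.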

- The peak of the curve is centered on the "real answer."

- The width of the curve shows how spread out the possible "noisy answers" are: the wider the curve, the farther from the real answer PINQ's noisy answer is likely to land.

- The height of the curve shows the relative probability of one noisy answer being chosen over another noisy answer.
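The three properties in the list above correspond to the parameters of the Laplace density, f(x) = exp(-|x - μ| / b) / (2b), where μ is the real answer (the peak) and b is the scale (the width). As a sketch, the Python below evaluates that density for a quiet curve and a noisy curve. The scale values 0.5 and 3.0 are arbitrary choices for illustration, not values taken from the pictured curves.

```python
import math

def laplace_pdf(x, center, scale):
    """Height of a Laplace curve peaked at `center` with width `scale`."""
    return math.exp(-abs(x - center) / scale) / (2.0 * scale)

real_answer = 1
for scale in (0.5, 3.0):  # a "quiet" curve and a "noisy" curve
    heights = [round(laplace_pdf(x, real_answer, scale), 3) for x in range(-2, 5)]
    print(f"scale={scale}: {heights}")
```

The quiet curve is tall and narrow, so nearly all of its height sits on or next to the real answer; the noisy curve is short and wide, giving far-off answers a real chance of being chosen.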

A quiet curve with few "fake" answers for PINQ to choose from:

A noisy curve with many "fake" answers for PINQ to choose from:

More noise equals less discernibility.

It's easy to wave your hands around and picture how, if you randomly add and remove people from "real answers" to questions, the presence or absence of a particular record becomes increasingly irrelevant, and therefore increasingly indiscernible, as you turn up the amount of noise. This in turn means that it becomes increasingly difficult to confidently isolate and identify a particular individual in the data set, precisely because you can never get a "straight" answer out of PINQ that is accurate down to the individual.

With differential privacy, I can't ever know that my eye-patch-wearing neighbor has a skeleton in his closet. I can only conclude that he might or might not be in the data set, with a degree of certainty that depends on how much noise is applied to the "real answer."

Below, you can see that if you get a noisy answer of 2, it is about 7x more likely that the "real answer" is 1 than that it is 0. A flatter, noisier curve would yield a substantially smaller margin.
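The "7x" comparison is a ratio of two curve heights at the same noisy answer (treating the two real answers as equally plausible beforehand). Assuming Laplace noise with scale b, the ratio for a noisy answer of 2 is exp(-|2 - 1| / b) / exp(-|2 - 0| / b) = exp(1 / b), so a ratio of about 7 corresponds to b ≈ 1 / ln 7 ≈ 0.51. The sketch below computes this. `likelihood_ratio` is a hypothetical helper, and the scale is back-derived from the 7x figure rather than read from the original chart.

```python
import math

def likelihood_ratio(noisy_answer, answer_a, answer_b, scale):
    """How many times likelier `noisy_answer` is if the real answer is
    answer_a rather than answer_b, assuming Laplace noise of this scale."""
    return math.exp((abs(noisy_answer - answer_b) - abs(noisy_answer - answer_a)) / scale)

# Scale at which a noisy answer of 2 makes "real answer 1" about 7x likelier than 0.
scale_for_7x = 1.0 / math.log(7)
print(round(scale_for_7x, 3))                             # 0.514
print(round(likelihood_ratio(2, 1, 0, scale_for_7x), 3))  # 7.0

# Flatter (noisier) curves shrink the margin.
for scale in (0.5, 1.0, 2.0, 5.0):
    print(scale, round(likelihood_ratio(2, 1, 0, scale), 2))
```

Doubling the scale takes the square root of the ratio, since exp(1 / 2b) = sqrt(exp(1 / b)); that is the "flatter curve, smaller margin" effect in numbers.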

But wait a minute: we started out by saying that our privacy guarantee promises that individuals will be completely non-discernible. Is non-discernible the same thing as hardly discernible?

Clearly not.

Is complete indiscernibility even possible with differential privacy?