Open Graph, Silk, etc.: Let's stop calling it a privacy problem

The recent announcements of Facebook’s Open Graph update and Amazon Silk have provoked the usual media reaction about privacy. Maybe it's time to give up on trying to fight data collection and data use issues with privacy arguments.

Briefly, the new features: Facebook is creating more ways for you to passively track your own activity and share it with others. Amazon, in the name of speedier browsing (on their new Kindle device), has launched a service that will capture all of your online browsing activity tied to your identity, and use it to do what sounds like collaborative filtering to predict your browsing patterns and speed them up.

Amazon likens what Silk is doing to the role of an Internet Service Provider, which seems reasonable, but since regulators are getting wary of how ISPs leverage the data that passes through them, Amazon may not always enjoy that association.

EPIC (Electronic Privacy Information Center) has sent a letter to the FTC requesting an investigation of Facebook's Open Graph changes and the new Timeline.

I'm not optimistic about the response. Depending on how the default privacy settings are configured, Open Graph may fall victim to another "Facebook ruined my diamond ring surprise by advertising it on my behalf" kerfuffle, which will result in a half-hearted apology from Zuckerberg and some shuffling around of checkboxes and radio buttons. The watchdogs aren’t as used to keeping tabs on Amazon, which has done a better job of meeting expectations around its use of customer data, so Silk may provoke a bit more soul-searching.

But I doubt it.

In an excerpt from his book “Nothing to Hide: The False Tradeoff Between Privacy and Security,” published in the Chronicle of Higher Education earlier this year, Daniel J. Solove does a great job of explaining why we have trouble protecting individual privacy at the cost of [national] security. In the course of his argument he makes two points that are useful in thinking about protecting privacy on the internet.

He quotes South Carolina law professor Ann Bartow as saying,

There are not enough privacy “dead bodies” for privacy to be weighed against other harms.

There's plenty of media chatter monitoring the decay of personal privacy online, but the conversations have been largely theoretical, the stuff of political and social theory. We have yet to have an event that crystallizes the conversation into a debate of moral rights and wrongs.

Whatevers, See No Evil, and the OMG!'s

At one end of the "privacy theory" debate, there are the Whatevers, whose blasé battle cry of “No one cares about privacy any more” is bizarrely intended to be reassuring. At the other end are the OMG!'s, who only speak of data collection and online privacy in terms of degrees of personal violation, which equally bizarrely has the effect of inducing public equanimity in the face of "fresh violations."

However, as per usual, the majority of people exist in the middle, where so long as they "See no evil and Hear no evil," privacy is a tab in the Settings dialog, not a civil liberties issue. Believe it or not, this attitude hampers both companies trying to get more information out of their users AND civil liberties advocates who desperately want the public to "wake up" to what's happening. Recently, privacy lost to free speech, but more on that in a minute.

When you look into most of the privacy concerns that are raised about legitimate web sites and software (not viruses, phishing or other malicious efforts), they usually have to do with fairly mundane personal information. Your name or address being disclosed inadvertently. Embarrassing photos. Terms you search for. The web sites you visit. Public records digitized and put on the web.

The most legally harmful examples involve identity theft, which, while not unrelated to internet privacy, falls squarely in the well-understood territory of criminal activity. What's less clear is what's wrong with "legitimate actors" such as Google and Facebook and what they're doing with our data.

Which brings us to a second point from Solove:

“Legal and policy solutions focus too much on the problems under the Orwellian metaphor—those of surveillance—and aren't adequately addressing the Kafkaesque problems—those of information processing.”

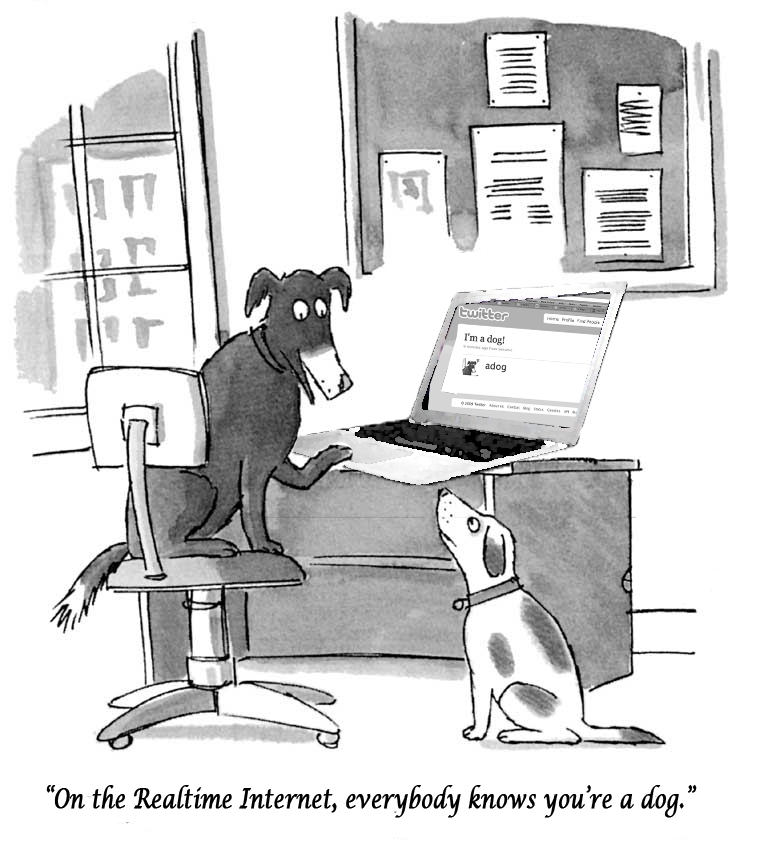

In other words, who cares if the servers at Google "know" what I'm up to? We can't as yet really even understand what it means for a computer to "know" something about human activity. Instead, the real question is: what is Google (the company, composed of human beings) deciding to do with this data?

What are People deciding to do with data?

By and large, the data collection that happens on the internet today is feeding into one flavor or another of “targeted advertising.” Loosely, that means showing you advertisements that are intended for an individual with some of your traits, based on information that has been collected about you. A male. A parent. A music lover. The changes to Facebook's Open Graph will create a targeting field day. Which, on some level, is a perfectly reasonable and predictable extension of age-old advertising and marketing practices.

In theory, advertising provides social value in bridging information gaps about useful, valuable products; data-driven services like Facebook, Google and Amazon are simply providing the technical muscle to close that gap.

However, Open Graph, Silk and other data-rich services place us at the top of a very long and shallow slide down to a much darker side of information processing, one that is less about the processing itself than about manipulation and the balance of power. And it's the very length and gentle slope of that slide that make it almost impossible for us to talk about what's really going wrong, and make it even somewhat pleasant to ride down. (Yes, I'm making a slippery slide argument.)

At the top of the slide are issues of values and dehumanization.

Recently employers have been making use of credit checks to screen potential candidates, automatically rejecting applicants with low credit scores. Perhaps this is an ingenious, if crude, way to quickly filter down a flood of job applicants. While its utility remains to be proven, it's with good reason that we pause to consider the unintended consequences of such a policy. In many areas, we have often chosen to supplement "objective," statistical evaluations with more humanist, subjective techniques (the college application process being one notable example). We are also a society that likes to believe in second chances.

A bit further down the slide, there are questions of fairness.

Credit card companies have been using purchase histories as a way to decide who to push to pay their debt in full and who to strike a deal with. In other words, they're figuring out who will be susceptible to "being guilted" and who's just going to give them the finger when they call. This is a truly ingenious and effective way to lower the cost and increase the effectiveness of debt collection efforts. But is it fair to debtors that some people "get a deal" and others don't? Surely, such inequalities have always existed. At the very least, it's problematic that such practices are happening behind closed doors with little to no public oversight, all in the name of protecting individual privacy.

Finally, there are issues of manipulation where information about you is used to get you to do things you don't actually want to do.

The fast food industry has been micro-engineering the taste, smell and texture of their food products to induce a very real food addiction in the human brain. Surely, this is where online behavioral data-mining is headed, amplified by the power to deliver custom-tailored experiences to individuals.

But it's just the Same-Old, Same-Old

This last scenario sounds bad, but isn't this simply more of the same old advertising techniques we love to hate? Is there a bright line test we can apply so we know when we've "crossed the line" over into manipulation and lies?

Drawing Lines

Clearly the ethics of data use and manipulation in advertising is something we have been struggling with for a long time and something we will continue to struggle with, probably forever. However, some lines have been drawn, even if they're not very clear.

While the original defining study on subliminal advertising has since been invalidated, when it was first publicized, the idea of messages being delivered subliminally into people’s minds was broadly condemned. In a world of imperfect definitions of “truth in advertising,” it was immediately clear to the public that subliminal messaging (if it could be done) crossed the line into pure manipulation, and that was unacceptable. It was quickly banned in the UK and Australia, and by the American networks and the National Association of Broadcasters.

Thought Experiment: If we were to impose a "code of ethics" on data practitioners, what would it look like?

Here's a real-world, data-driven scenario:

- Pharmacies sell customer information to drug companies so that they can identify doctors who will be most "receptive" to their marketing efforts.

- Drug companies spend $1 billion a year advertising online to encourage individuals to “ask your doctor about [insert your favorite drug here]” with vague happy-people-in-sunshine imagery.

- Drug companies employed 90,000 salespeople (as of 2005) to visit the best target doctors and sway them to their brands.

Vermont passed a law outlawing the use of the pharmacy data without patient consent on the grounds of individual privacy. Then, this past June 23rd, the Supreme Court decided it was a free-speech problem and struck down the Vermont law.

Privacy as an argument for hemming in questionable data use will probably continue to fail.

The trouble again is that theoretical privacy harms are weak sauce in comparison to data as a way to "bridge information gaps." If we shut down use of this data on the basis of privacy, that prevents the government from using the same data to prioritize distribution of vaccines to clinics in high-risk areas.

Ah, but here we've stumbled on the real problem…

Let's shift the conversation from Privacy to Access

Innovative health care cost reduction schemes like care management are starved for data. Privacy concerns about broad, timely analysis of tax returns have prevented effective policy evaluation. Municipalities negotiating with corporations lack data to make difficult economic stimulus decisions. Meanwhile private companies are drowning in data that they are barely scratching the surface of.

At the risk of sounding like a broken record, since we have written volumes about this already:

- The problem does not lie in the mere fact that data is collected, but in how it is secured and processed and in whose interest it is deployed.

- Your activity on the internet, captured in increasingly granular detail, is enormously valuable, and can be mined for a broad range of uses that as a society we may or may not approve of.

- Privacy is an ineffective weapon to wield against the dark side of data use. Instead, we should focus our efforts on (1) regulations that require companies to be more transparent about how they're using data and (2) making personal data into a public resource that is in the hands of many.